SPS Tech Summit Challenge

SPS Tech Summit and SPS Tech Jam are two tech events organized by SPS Commerce annually. These events allow SPS employees to gain new knowledge as well as share their knowledge with others on different technology topics. This year, the organizers have decided to host a competition named “The AWS DeepRacer Challenge”, which runs in parallel to the above two events.

SPS employees can team up, learn about AWS DeepRacer and Machine Learning, and compete for glory. Our team is from the SPS Commerce Melbourne office, and we had our first competition during the SPS Tech Jam event held in July 2020.

There are three types of AWS DeepRacer races (time trial, object avoidance, head-to-head), and this competition was a time trial race. Whoever completes the track in the shortest time wins. There were seven laps, and each player was ranked by their fastest lap.

All our team members are beginners in Machine Learning as well as DeepRacer, so we learned a lot of new things while working on this challenge. The aim of this article is to share that knowledge and introduce the basics of Machine Learning and Amazon’s DeepRacer, especially for newcomers to the field.

Machine Learning

If you google the term “Machine Learning”, you will find different definitions with many complex words. But if you are a beginner to the field, here is what Machine Learning means in very simple words.

“Machine Learning is the process of teaching a machine to do a specific task on its own through examples, experiences and patterns.”

If we think about it, this is the same way a human brain learns things. When you see a dog, you identify it as a dog because you have seen examples of dogs before. You know from experience that it hurts when you cut yourself with a knife. If you are given a basket of different fruits, you can group them based on the features of each type of fruit. A machine can learn in the same way.

There are three types of Machine Learning.

Supervised Learning – The machine learns from a set of labeled example data (training data). For instance, we can feed the machine a set of images of dogs, each labeled “dog”. Once the learning phase completes, the machine can separate “dog” and “non-dog” images in a given set of unseen images.

Unsupervised Learning – In this method, the machine does not get any labeled data. Rather, the machine tries to divide objects into groups based on hidden features. For instance, a machine is fed with a pile of “fruit” images and the task is to separate the images into groups. There are no teachers and the machine tries to find any patterns on its own.

Reinforcement Learning – In this way of learning, the machine acts within an environment and learns with the experience gained. Each time the machine performs an action, it will get a reward based on the validity of that action. The machine’s goal is to perform actions so that it gets the maximum reward. This is similar to how we train a dog. We give a dog a treat if it does the behavior we’re seeking to train (sitting, for example) and no treat if it doesn’t. After some time, the dog learns to differentiate the good from the bad such that it receives more treats.

Now let’s discuss AWS DeepRacer and its connection with Machine Learning.

AWS DeepRacer

AWS DeepRacer is an autonomous 1/18th scale race car developed by AWS. It has a virtual version, where the car drives in a virtual environment in the AWS DeepRacer Console, as well as a physical version, where the car runs on a physical track. It senses its environment via cameras and a LiDAR attached to the car.

How do we teach this machine (car) to drive autonomously? This is where Machine Learning comes into play. Among the three types of Machine Learning we discussed above, AWS DeepRacer uses Reinforcement Learning to enable autonomous driving. Let’s discuss how the car learns with Reinforcement Learning.

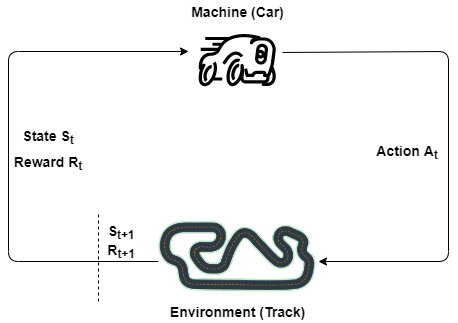

Reinforcement Learning in DeepRacer

From the sensors attached to the car, it receives information about its environment (the track) such as images from cameras, objects on the track from the LiDAR, etc. In other words, the car gets the “state” of the environment through its sensors.

Based on this “state”, it performs an “action” that it thinks is the best one. It chooses an “action” from a predefined “action space”. An “action” in the “action space” is associated with a steering angle and a speed. An example “action” may look like “Turn 30 degrees with 0.4 m/s speed”, “Turn -15 degrees with 0.8 m/s”, “Go straight with 0.8 m/s speed”, etc.
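To make the idea of an “action space” concrete, here is a minimal Python sketch that builds one by pairing steering angles with speeds. The angle and speed values, the dictionary keys, and the helper names `build_action_space` and `describe` are our own assumptions for illustration; the real action space is configured in the AWS DeepRacer Console.

```python
from itertools import product

# Hypothetical values for illustration only: steering angles in degrees
# and speeds in m/s. The console lets you pick your own.
STEERING_ANGLES = [-30, -15, 0, 15, 30]
SPEEDS = [0.4, 0.8]


def build_action_space(angles, speeds):
    """Return every (steering angle, speed) pairing as a list of actions."""
    return [
        {"index": i, "steering_angle": angle, "speed": speed}
        for i, (angle, speed) in enumerate(product(angles, speeds))
    ]


def describe(action):
    """Render an action in the style of the examples in the text."""
    angle, speed = action["steering_angle"], action["speed"]
    if angle == 0:
        return f"Go straight with {speed} m/s speed"
    return f"Turn {angle} degrees with {speed} m/s speed"


action_space = build_action_space(STEERING_ANGLES, SPEEDS)
for action in action_space:
    print(action["index"], describe(action))
```

With five angles and two speeds, the car has ten discrete actions to choose from at every step.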

After each “action”, the car gets a “reward” for the action it just performed. If the action is the best one for the particular state, it gets a higher reward, and if the action is wrong, it gets a lower reward. This sequence of events repeats throughout the training process. Even though the actions taken by the car are random at the beginning, after some time the car learns to take the actions that maximize its reward.
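The loop described above (act, get a reward, gradually prefer the better actions) can be illustrated with a toy example. This is only a sketch under our own assumptions: there is a single state, three made-up actions, a made-up `toy_reward` function, and a simple epsilon-greedy rule. DeepRacer itself trains a neural network, which this does not attempt to reproduce.

```python
import random

ACTIONS = ["left", "straight", "right"]


def toy_reward(action):
    """Stand-in for the environment: here, driving straight is the best action."""
    return 1.0 if action == "straight" else 0.1


def train(steps=500, epsilon=0.2, seed=0):
    """Learn an average reward per action by trial and error."""
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}  # running average reward per action
    count = {a: 0 for a in ACTIONS}    # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore: random action
        else:
            action = max(ACTIONS, key=value.get)  # exploit: best action so far
        reward = toy_reward(action)
        count[action] += 1
        # Incremental update of the running average for this action.
        value[action] += (reward - value[action]) / count[action]
    return value


values = train()
best_action = max(values, key=values.get)
print(best_action, values)
```

Early on the agent has no information, so its choices are effectively arbitrary. Once it has tried each action, it mostly repeats the one with the highest average reward.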

Now the question is how to reward the car. For that we have something called a “Reward Function”.

Reward Function

Writing a Reward Function is a challenging task. The Reward Function’s goal is to reward the car based on the actions it takes. If our reward function does not reward the car correctly, we cannot expect the car to choose the best actions when it runs on the track. The dog-training example applies here too: if we do not reward the dog correctly for the actions it takes, it does not learn to differentiate the good from the bad. So, rewarding the car correctly is very important. Let us see how we can do that.

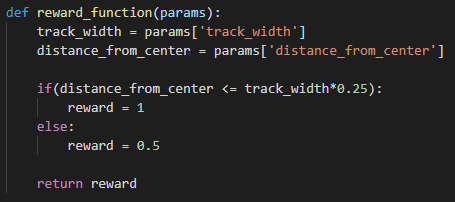

AWS DeepRacer gives us the “state” of the environment as a set of parameters. This set of parameters is the input for our Reward Function, which the AWS DeepRacer Console allows you to write in Python. There are 23 such parameters, and you can find details about all of them in the Developer Guide for AWS DeepRacer. We will discuss the following two parameters to give you an idea of how to use them to write a Reward Function.

- track_width – width of the track

- distance_from_center – distance of the car from the track center, in meters

Using these two parameters we can calculate how close the car is to the center of the track.

If distance_from_center <= track_width*0.25, the car is close to the center line of the track.

If distance_from_center > track_width*0.25, the car is far away from the center line.

Based on these two conditions, we can write our Reward Function in Python to give a higher reward if the car is close to the center line and a lower reward otherwise.
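A minimal sketch of such a function is shown below. The function name `reward_function` and its single `params` dictionary argument follow the convention the AWS DeepRacer Console expects; the reward values 1.0 and 1e-3 are our own assumptions, chosen only to make “higher” and “lower” concrete.

```python
def reward_function(params):
    """Reward the car more when it stays near the center line."""
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    if distance_from_center <= track_width * 0.25:
        reward = 1.0   # close to the center line: higher reward (assumed value)
    else:
        reward = 1e-3  # far from the center line: lower reward (assumed value)

    return float(reward)


# Example calls with hand-made values; during training the console
# supplies the real parameters.
print(reward_function({"track_width": 1.0, "distance_from_center": 0.1}))
print(reward_function({"track_width": 1.0, "distance_from_center": 0.4}))
```

A common refinement is to use more than two bands, so that the reward decreases gradually as the car drifts away from the center.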

AWS DeepRacer Console

The AWS DeepRacer Console has a wizard-like process that we can follow to create a model. The console is quite self-explanatory, so we are not going to discuss it in detail. We will just look at the basic steps to create a Reinforcement Learning model.

- Give your model a name with a description and choose a track out of the list of tracks to train your model on.

- Choose the race type you want to use for training your model. There are 3 types of races that AWS offers.

  - Time trial – Car races against the clock. No obstacles.

  - Object avoidance – Car races on a track with obstacles.

  - Head-to-head – Car races against other moving cars.

- Choose a car. There is a section called “Garage” in the AWS DeepRacer Console where you can create your own cars with customized specifications and choose them to train the model. If you do not want a customized car, choose the default one.

- Create the Reward Function

- Choose values for hyperparameters. Hyperparameters are a set of parameters that the algorithm uses to train the model. Your model trains well if you set suitable values for them. Details about hyperparameters and how to set their values are explained in the AWS DeepRacer Developer Guide.

- Set training time. You can train your model for a time period from 5 minutes to 24 hours. It is up to you to decide for how long your model needs to be trained.

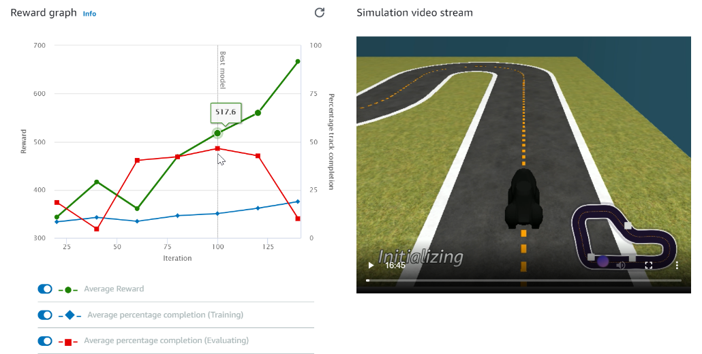

Once you have completed all the above steps, your model starts its training phase. Training happens with a virtual car on a virtual track. During training you can watch a live video stream of how the car performs on the track. There is also a Reward Graph, which gives you an idea of how the car is being rewarded for its actions.

After the training has completed, you can evaluate your model against different tracks to see how well it performs. If you have the physical car, you can download the model you trained and upload it to the car. Then you can evaluate your model by running the car on a physical track.

Training Tips

This section provides some tips we learned during the training process of our model.

Start with a simple reward function – Even though you have many ideas for the reward function at the beginning, always start with the simplest one and then improve gradually. If your model is not performing well, a complex reward function makes it hard to figure out what went wrong. But if you develop the reward function from a simple one, it is easy to identify the issues and fix them as you go on.

Try to watch the live video stream while training and evaluating – Watching the live video stream of the training and evaluation process allows you to observe the actions that the car takes at different points of the track. Observing those actions helps you improve the reward function. For instance, if you observe the car following a zig-zag path, you can change your reward function so that it gives the car a higher reward for driving in a straight line.

Clone the model and train on different tracks – Training the model on the same track over and over makes it overfit to that specific track. If that happens, your model might not perform well on other tracks. To avoid that, you can clone the model you trained on a certain track and train it further on a different track.
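As an illustration of the zig-zag tip above, the sketch below extends a simple center-line reward with a penalty for sharp steering. It assumes the `steering_angle` input parameter (in degrees) described in the AWS DeepRacer Developer Guide. The 15-degree threshold, the 0.8 penalty factor and the reward values are our own assumptions, not tuned results.

```python
# Assumed threshold (degrees) above which steering counts as "too sharp".
ABS_STEERING_THRESHOLD = 15.0


def reward_function(params):
    """Center-line reward with a penalty that discourages zig-zag driving."""
    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]
    abs_steering = abs(params["steering_angle"])  # only the magnitude matters

    # Start from the simple center-line reward.
    if distance_from_center <= track_width * 0.25:
        reward = 1.0
    else:
        reward = 1e-3

    # Reduce the reward when the car steers sharply (assumed penalty factor).
    if abs_steering > ABS_STEERING_THRESHOLD:
        reward *= 0.8

    return float(reward)


# Example calls with hand-made values.
print(reward_function({"track_width": 1.0, "distance_from_center": 0.1, "steering_angle": 5.0}))
print(reward_function({"track_width": 1.0, "distance_from_center": 0.1, "steering_angle": -20.0}))
```

Because this version only adds one rule to the simple function, it is easy to tell whether the new rule helped when you watch the next training run.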

Training a Reinforcement Learning model like this on your own can be a challenging task, especially if you are a beginner in the field. But AWS DeepRacer makes this easier by integrating the required components and providing the steps to follow in the AWS DeepRacer Console. You can learn just the basics of Reinforcement Learning and jump straight into it. As you get your hands dirty with the work, your knowledge improves. That is why AWS DeepRacer is an ideal starting point for learning about Machine Learning.

We hope this post helps you kick-start your own AWS DeepRacer journey. Good luck!

Sachintha Gamlath